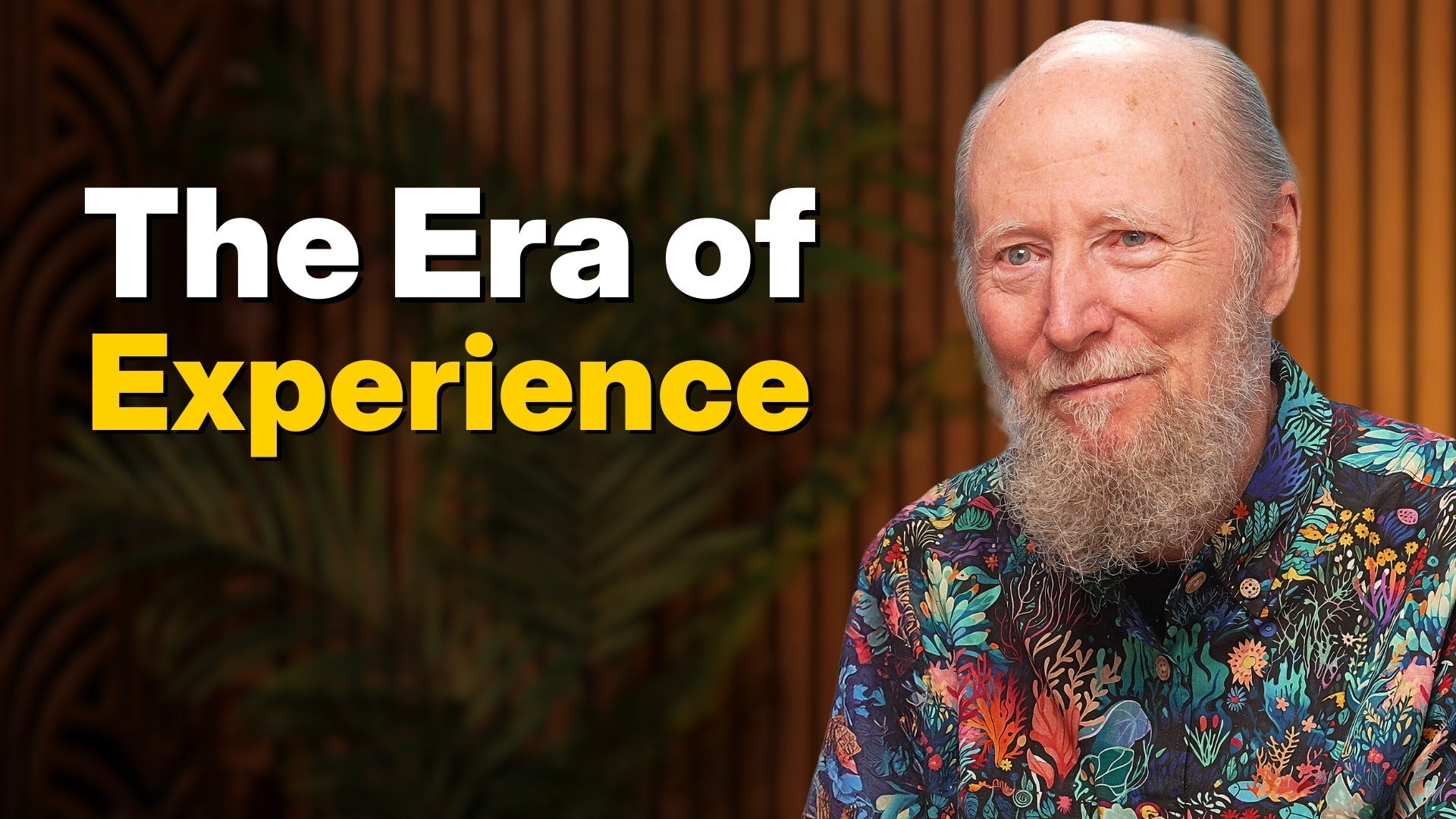

Richard Sutton – Father of RL thinks LLMs are a dead end

Richard Sutton is the father of reinforcement learning, winner of the 2024 Turing Award, and author of The Bitter Lesson. And he thinks LLMs are a dead end.

After interviewing him, my steel man of Richard’s position is this: LLMs aren’t capable of learning on the job, so no matter how much we scale, we’ll need some new architecture to enable continual learning.

And once we have it, we won’t need a special training phase: the agent will just learn on the fly, like all humans, and indeed, like all animals.

This new paradigm will render our current approach with LLMs obsolete.

In our interview, I did my best to represent the view that LLMs might function as the foundation on which experiential learning can happen… Some sparks flew.

A big thanks to the Alberta Machine Intelligence Institute for inviting me up to Edmonton and for letting me use their studio and equipment.

Enjoy!

Watch on YouTube; listen on Apple Podcasts or Spotify.

Sponsors

* Labelbox makes it possible to train AI agents in hyperrealistic RL environments. With an experienced team of applied researchers and a massive network of subject-matter experts, Labelbox ensures your training reflects important, real-world nuance. Turn your demo projects into working systems at labelbox.com/dwarkesh

* Gemini Deep Research is designed for thorough exploration of hard topics. For this episode, it helped me trace reinforcement learning from early policy gradients up to current-day methods, combining clear explanations with curated examples. Try it out yourself at gemini.google.com

* Hudson River Trading doesn’t silo their teams. Instead, HRT researchers openly trade ideas and share strategy code in a mono-repo. This means you’re able to learn at incredible speed and your contributions have impact across the entire firm. Find open roles at hudsonrivertrading.com/dwarkesh

Timestamps

(00:00:00) – Are LLMs a dead end?

(00:13:04) – Do humans do imitation learning?

(00:23:10) – The Era of Experience

(00:33:39) – Current architectures generalize poorly out of distribution

(00:41:29) – Surprises in the AI field

(00:46:41) – Will The Bitter Lesson still apply post AGI?

(00:53:48) – Succession to AIs

Get full access to Dwarkesh Podcast at www.dwarkesh.com/subscribe

Published 3 months ago