Podcast Episode Details

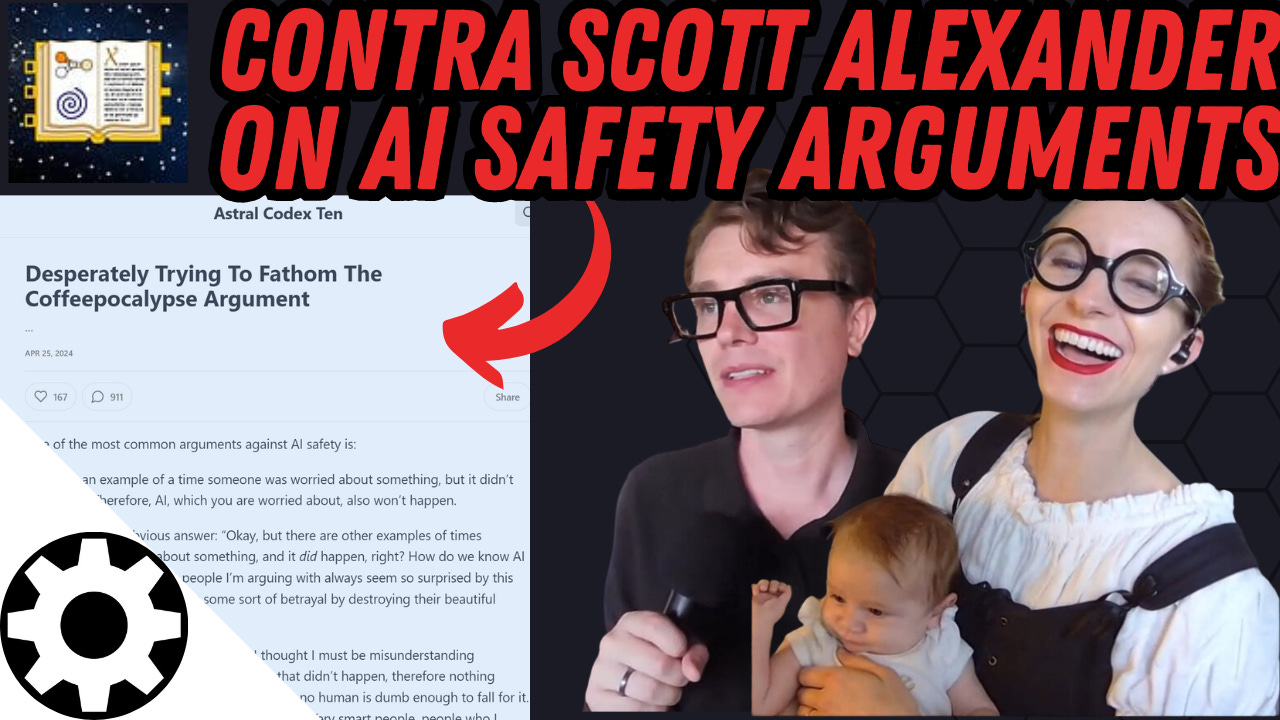

Contra Scott Alexander on AI Safety Arguments

In this thought-provoking video, Malcolm and Simone Collins offer a detailed response to Scott Alexander's article on AI apocalypticism. They analyze the historical patterns of accurate and inaccurate doomsday predictions, providing insights into why AI fears may be misplaced. The couple discusses the characteristics of past moral panics, cultural susceptibility to apocalyptic thinking, and the importance of actionable solutions in legitimate concerns. They also explore the rationalist community's tendencies, the pronatalist movement, and the need for a more nuanced approach to technological progress. This video offers a fresh perspective on AI risk assessment and the broader implications of apocalyptic thinking in society.

Malcolm Collins: [00:00:00] I'm quoting from him here, okay? One of the most common arguments against AI safety is, here's an example of a time someone was worried about something, but it didn't happen.

Therefore, AI, which you are worried about, also won't happen. I always give the obvious answer. Okay. But there are other examples of times someone was worried about something and it did happen, right? How do we know AI isn't more like those?

So specifically, what he is arguing against is: every 20 years or so you get one of these apocalyptic movements, and this is why we're discounting this movement. This is how he ends the article, so people know this isn't an attack piece; this is what he asked for in the article. He says, conclusion, I genuinely don't know what these people are thinking.

I would like to understand the mindset of people who make arguments like this, but I'm not sure I've succeeded. What is he missing according to you? He is missing something absolutely giant in everything that he's laid out.

And it is a very important point, and it's very clear from his write-up that this idea had just never occurred to him.

[00:01:00] Would you like to know more?

Malcolm Collins: Hello, Simone. I am excited to be here with you today. Today we are going to be creating a video reply slash response to an argument that Scott Alexander, the guy who writes Astral Codex Ten or Slate Star Codex, depending on what era you were introduced to his content, wrote about arguments against AI apocalypticism, which are based around... it'll be clear when we get into the piece, because I'm going to read some parts of it. But no, I should note:

This is not a "Scott Alexander is not smart" piece or anything like that. We actually think Scott Alexander is incredibly intelligent and well-meaning. And he is an intellectual who I consider a friend and somebody whose work I enormously respect. And I am creating this response because the piece is written in a way that actively requests [00:02:00] a response.

It's like, why do people believe this argument when I find it to be so weak? Like one of those "what am I missing here?" kind of things.

He just clearly... and I like the way he lays out his argument, because it's very clear that, yes, there's a huge thing he's missing. And it's clear from his argument and the way that he thought about it that he's just literally never considered this point, and it's why he doesn't understand this argument.

So we're going to go over his counter argument and we're going to go over the thing that he happens to be missing. And I'm quoting from him here, okay? One of the most common arguments against AI safety is, here's an example of a time someone was worried about something, but it didn't happen.

Therefore, AI, which you are worried about, also won't happen. I always give the obvious answer. Okay. But there are other examples of times someone was worried about something and it did happen, right? How do we know AI isn't more like those? The people I'm arguing with always seem [00:03:00] so surprised by this response, as if I'm committing some sort of betrayal by destroy

Published on 1 year, 6 months ago